Why Diffusion Models Don't Memorize: The Role of Implicit Dynamical Regularization in Training

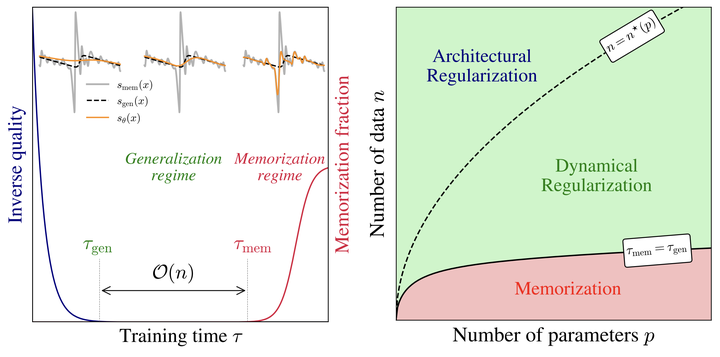

(Left) Illustration of the training dynamics of a diffusion model. (Right) Phase diagram illustrating the different regimes of diffusion models (memorization, architectural regularization or dynamical regularization).


Abstract

Diffusion models trained on a sufficiently large amount of data generate original, high-quality samples instead of simply copying their training data, as the curse of dimensionality would suggest. How do they avoid memorization? We uncover one of the key mechanisms behind this behavior: an implicit form of regularization in the training dynamics itself. The training time required to start memorizing the samples scales linearly with the dataset size.

Type

Publication

arXiv:2505.17638